|

Mail | LinkedIn | Google Scholar | GitHub | CV

Last updated: September 2025

I am an ELLIS PhD candidate at the University of Amsterdam in the Machine Learning Lab, jointly supervised by Eric Nalisnick (Johns Hopkins University) and Christian Naesseth. I am part of the Delta Lab, a research collaboration with the Bosch Center for Artificial Intelligence. In that context, I am also advised by Bosch research scientists Christoph-Nikolas Straehle and Kaspar Sakmann.

My research focuses on principled and efficient uncertainty quantification for deep learning, with the aim of reliable model reasoning and decision-making. This primarily involves probabilistic modelling with statistical frameworks such as conformal prediction, risk control, and e-values, as well as Bayesian principles. I am also interested in connections to other notions of model reliability such as calibration and forecaster scoring, robustness and generalization, or interpretability. Applications of interest include computer vision, time series, and online or stream settings.

I graduated with an MSc in Statistics from ETH Zurich, specialising in machine learning and computational statistics. My master's thesis with the MIE Lab was on uncertainty quantification in traffic prediction (see here). I obtained a BSc in Industrial Engineering from the Karlsruhe Institute of Technology (KIT), focusing on statistics and finance. |

|

|

|

|

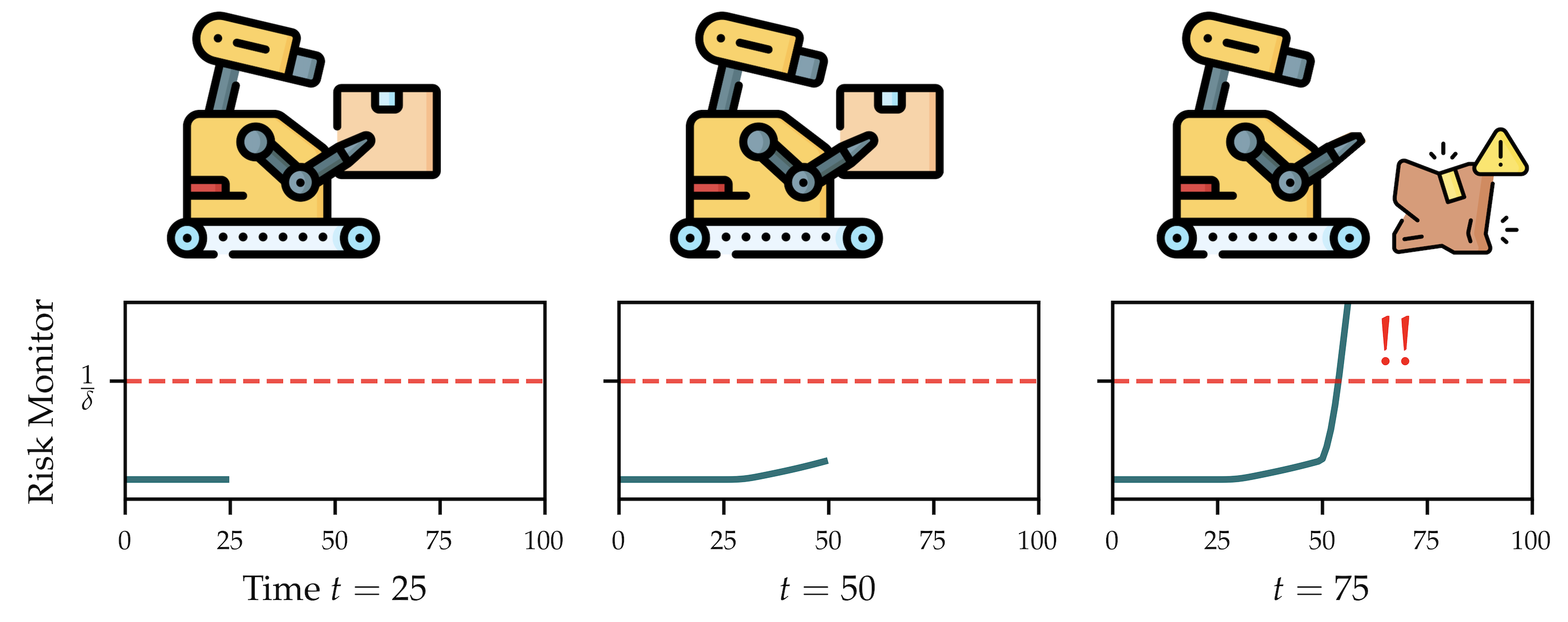

Alexander Timans*, Rajeev Verma*, Eric Nalisnick, Christian Naesseth Conference on Uncertainty in Artificial Intelligence (UAI), 2025 * Equal contribution Links: Paper | Code | Poster We propose continuous risk monitoring of deployed models via a sequential hypothesis testing framework. The approach makes no assumptions about the incoming data stream and has favourable statistical properties, including false alarm guarantees. |

|

|

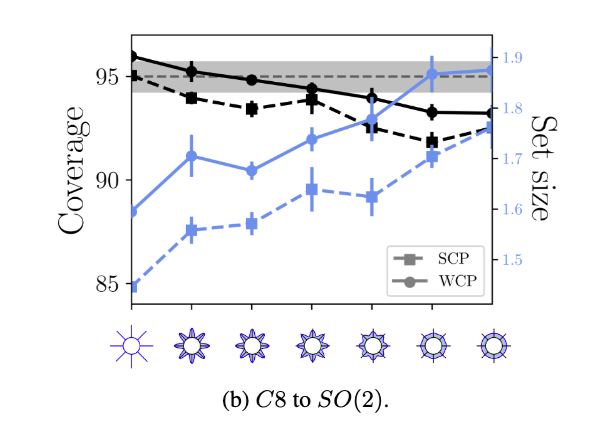

Putri van der Linden, Alexander Timans, Erik Bekkers Conference on Uncertainty in Artificial Intelligence (UAI), 2025 Links: Paper | Code | Poster We explore the unlikely intersection of conformal prediction and geometric deep learning, and show how geometric information can be incorporated into various conformal procedures to render them practical again under different corruption settings. |

|

|

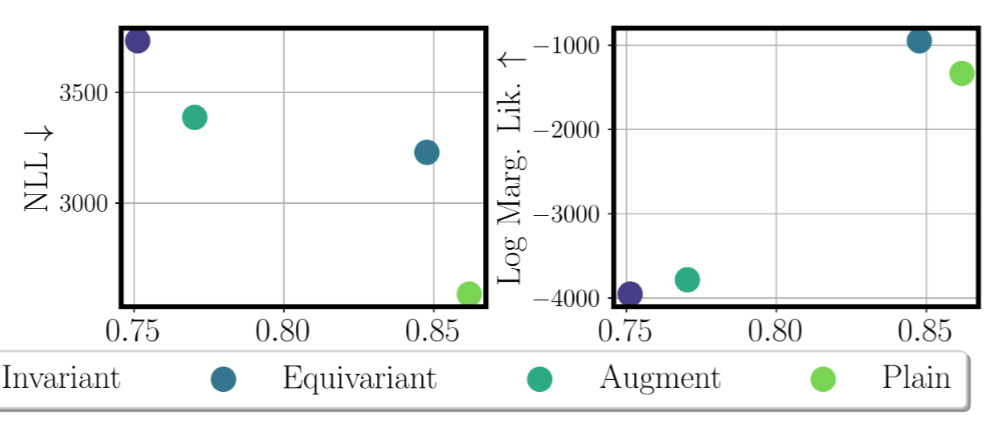

Putri van der Linden, Alexander Timans, Dharmesh Tailor, Erik Bekkers UAI Workshop on Tractable Probabilistic Modeling, 2025 Links: Paper | Code | Poster We evaluate how different uncertainty measures may inform equivariant model selection as an alternative to standard error comparison. We point towards some mismatches between the marginal likelihood and geometric constraints, but more investigation is needed. |

|

|

Alexander Timans, Christoph-Nikolas Straehle, Kaspar Sakmann, Eric Nalisnick Int'l Conference on Artificial Intelligence and Statistics (AISTATS), 2025 Links: Paper | Code | Poster We propose |

|

|

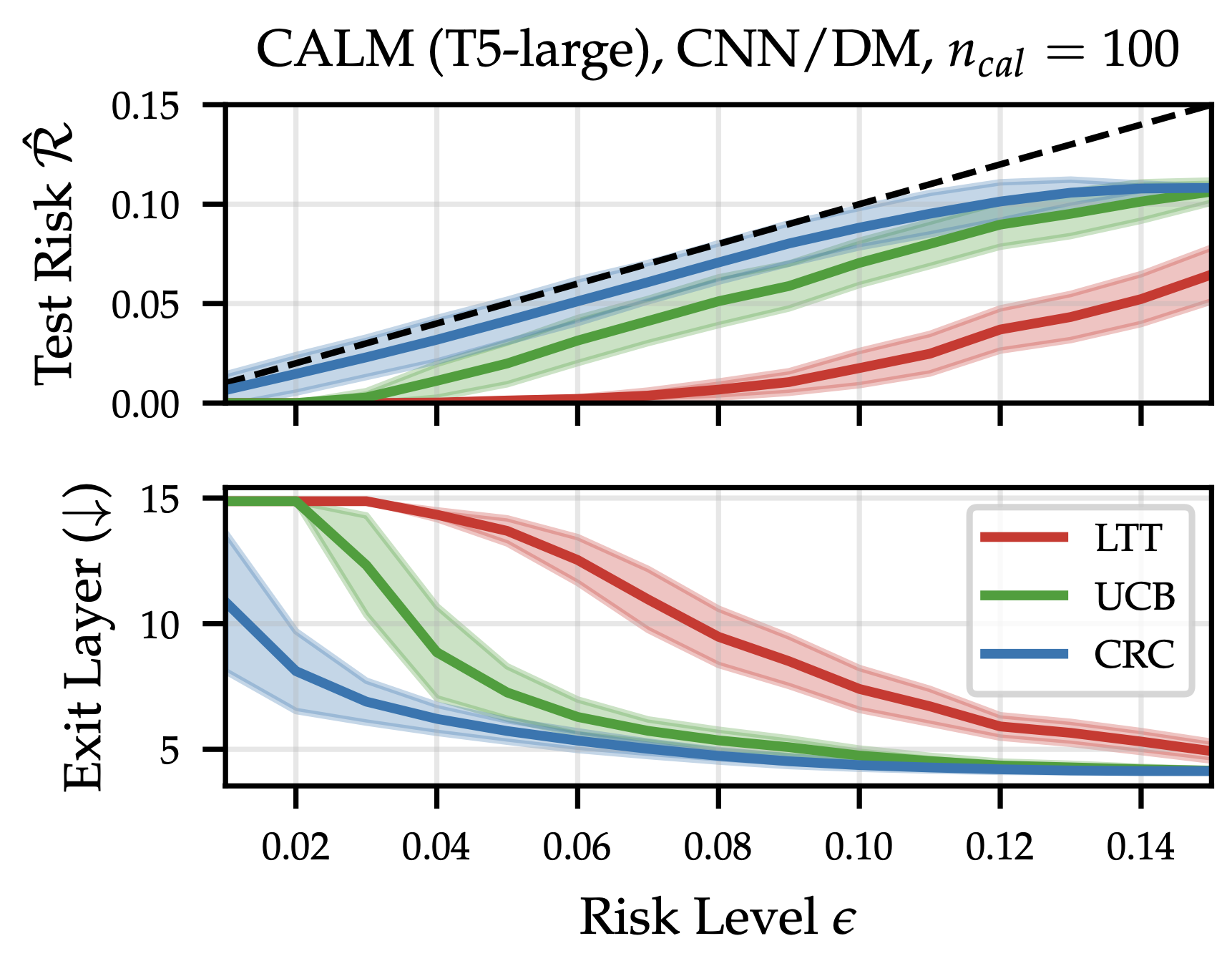

Metod Jazbec*, Alexander Timans*, Tin Hadzi Veljkovic, Kaspar Sakmann, Dan Zhang, Christian Naesseth, Eric Nalisnick Neural Information Processing Systems (NeurIPS), 2024 Also in: ICML Workshops on Structured Probabilistic Inference and Generative Modelling & Efficient Systems for Foundation Models * Equal contribution Links: Paper | Code | Poster We investigate how to adapt risk control frameworks to early-exit neural networks (EENNs), providing a distribution-free, post-hoc solution that tunes the exiting mechanism of an EENN so that exits only occur when the output is guaranteed to satisfy user-specified performance goals. |

|

|

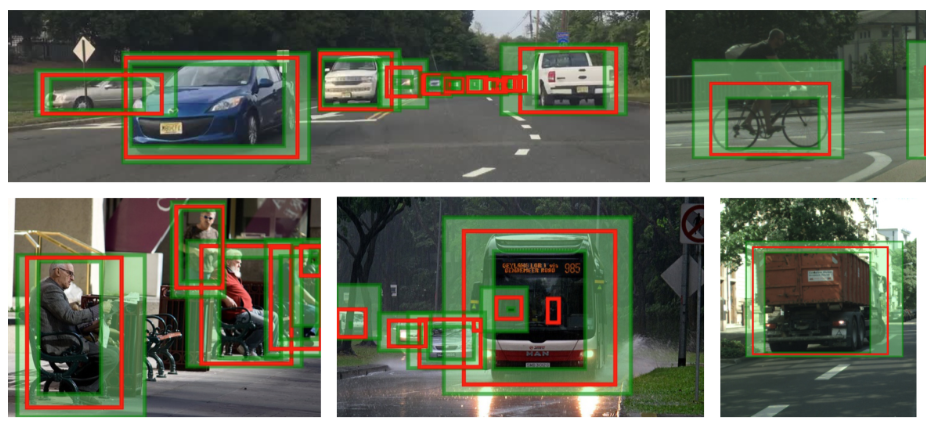

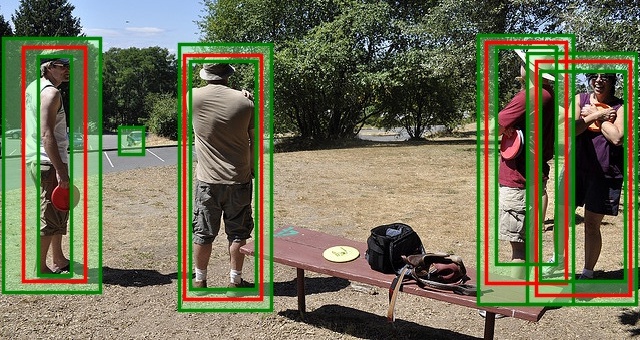

Alexander Timans, Christoph-Nikolas Straehle, Kaspar Sakmann, Eric Nalisnick European Conference on Computer Vision (ECCV) [Oral], 2024 Also in: ECCV Workshop on Uncertainty Quantification for CV Links: Paper | Code | Poster We propose a two-step conformal approach that propagates uncertainty in predicted class labels into the uncertainty intervals of bounding boxes. This broadens the validity of conformal coverage guarantees to include incorrectly classified objects. This work substantially extends our workshop paper. |

|

|

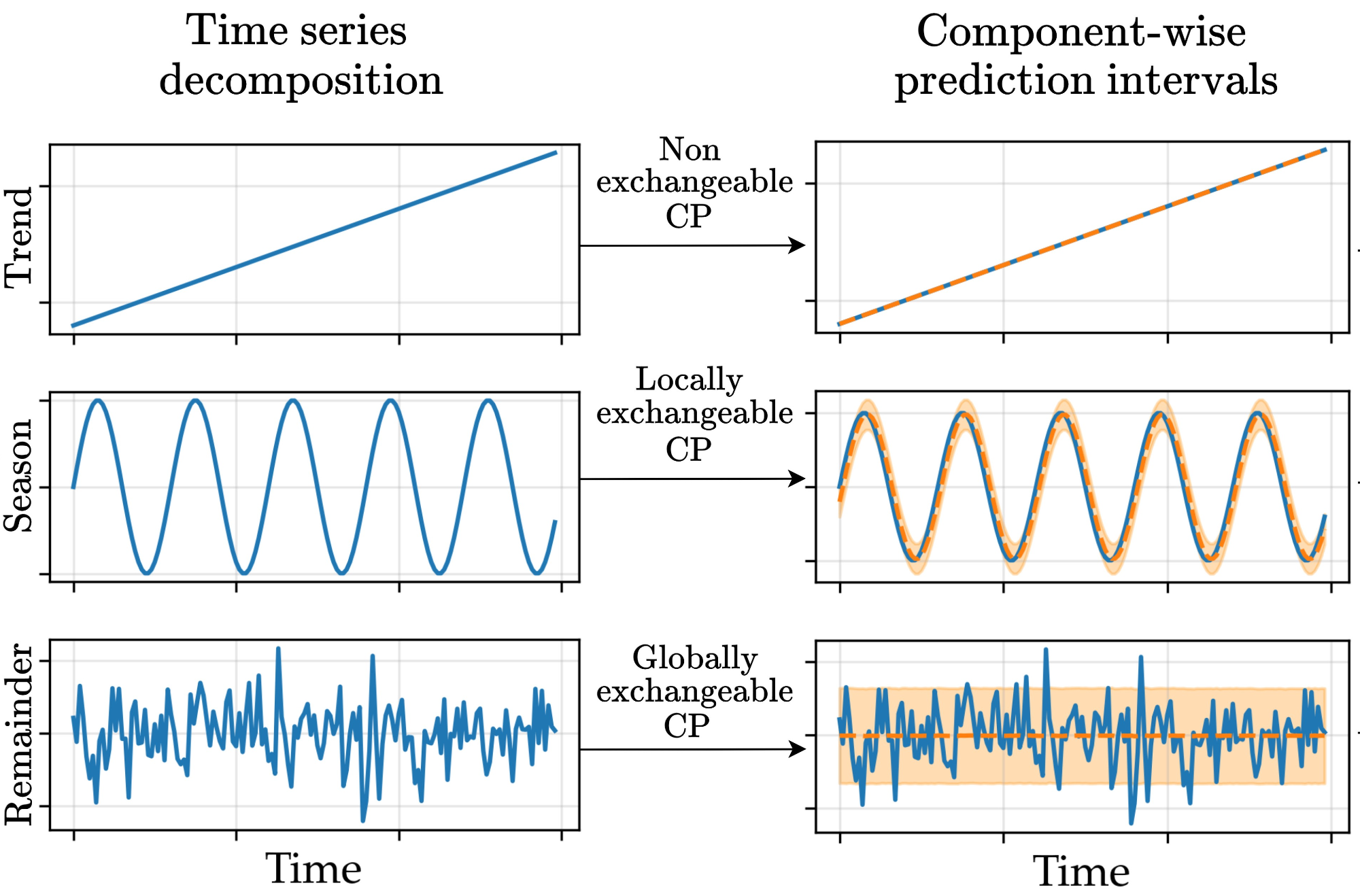

Derck Prinzhorn, Thijmen Nijdam, Putri van der Linden, Alexander Timans Conformal and Probabilistic Prediction with Applications (PMLR), 2024 Links: Paper | Code We present a novel use of conformal prediction for time series forecasting that incorporates time series decomposition, allowing us to tailor the methods used to the different exchangeability regimes underlying each time series component. |

|

|

Alexander Timans, Christoph-Nikolas Straehle, Kaspar Sakmann, Eric Nalisnick ICCV Workshop on Uncertainty Quantification for CV, 2023 Links: Paper We quantify the uncertainty in multi-object 2D bounding box predictions via conformal prediction, producing tight prediction intervals with guaranteed per-class coverage for bounding boxes. |

|

|

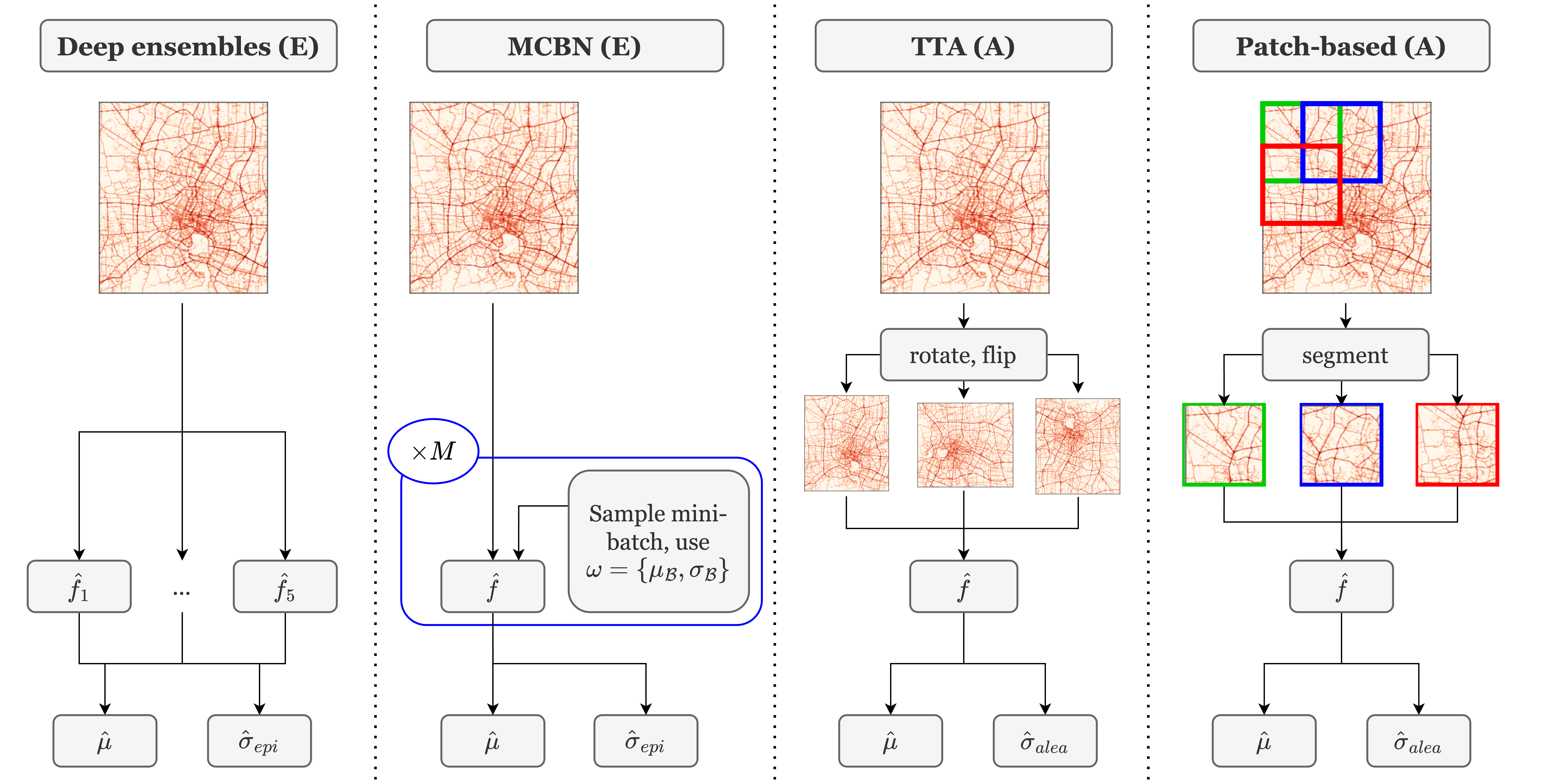

Alexander Timans, Nina Wiedemann, Nishant Kumar, Ye Hong, Martin Raubal Master's Thesis (arXiv), 2023 Links: Paper | Code We explore different uncertainty quantification methods on a large-scale image-based traffic dataset spanning multiple cities and time periods, originally featured as a NeurIPS 2021 prediction challenge. A method combining deep ensembles and patch-based deviations recovers meaningful uncertainty relating to traffic dynamics. In a case study, we demonstrate how uncertainty estimates can be employed for unsupervised outlier detection on traffic behaviour. |

|

- Reviewing: AISTATS 2026, AAAI 2026, NeurIPS 2025, TMLR 2025, ICML 2025, AISTATS 2025, ICLR 2025, NeurIPS 2024 (Top Reviewer), ICCV 2023

- Teaching & Supervision: Dominykas Šeputis (MSc Thesis / UvA), Alejandro Muñoz (MSc Thesis / UvA), Jesse Brouwers (MSc Thesis / UvA), Project AI (MSc / UvA), Human-in-the-loop ML (MSc / UvA), Introduction to ML (BSc / UvA), Derck Prinzhorn (BSc Thesis / UvA, Thesis award), Deep Learning 2 (MSc / UvA), ANOVA (MSc / ETH), Econometrics (BSc / KIT)

- Striving for some sense of work-life balance, creative outlets, and all things bikes.

|

Source: adapted from Dharmesh Tailor's fork of Leonid Keselman's fork of John Barron's website. |